Adaptive Stretching of Representations Across Brain Regions and Deep Learning Model Layers

Deep Learning Medical Imaging | Posted by mhb on 2025-12-03 04:07:31

Introduction

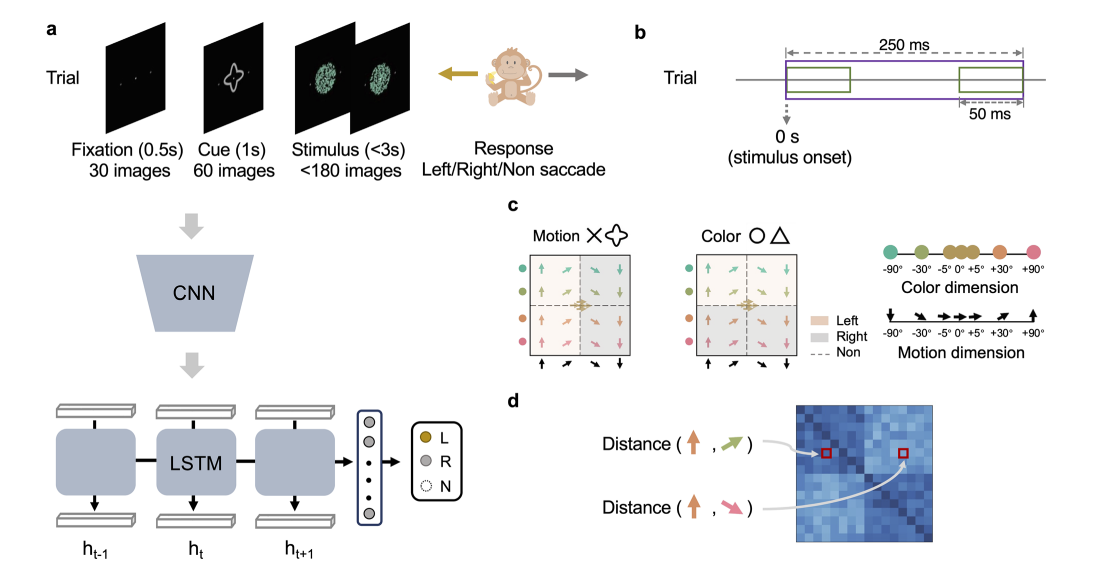

The human brain constantly adapts to focus on task-relevant information. This study explores how different brain regions stretch neural representations depending on whether a task requires attention to color or to motion. Using recordings from monkeys performing a flexible decision-making task and a deep learning model trained on the same stimuli, the researchers show that both biological and artificial systems reshape their internal representations to optimize performance. The comparison sheds light on how attention, learning, and neural coding work at a systems level.

Methods

- Subjects: Two rhesus monkeys performing color-vs-motion decisions.

- Recordings: Multi-site spiking activity from PFC, FEF, LIP, IT, MT, V4.

- Analysis tool: Representational Similarity Analysis (RSA).

- Model: CNN-LSTM trained on the exact same stimuli (same sequence of images).

- Metrics: Spike timing (ISI, SPIKE), rate coding, model-based attention weights.

- Goal: Compare neural representations with model representations to see how attention changes internal structure.
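To make the RSA step concrete, here is a minimal sketch in plain Python. It builds a representational dissimilarity matrix (RDM) for a set of stimuli and then correlates a "neural" RDM with a "model" RDM. The dissimilarity measure (1 minus Pearson correlation), the function names, and the toy response vectors are all assumptions chosen for illustration; they are not the measures or data used in the study.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def rdm(patterns):
    """Upper triangle of an RDM as {(stim_a, stim_b): dissimilarity}.

    patterns maps a stimulus label to its response vector.
    Dissimilarity is 1 - Pearson r (an illustrative choice).
    """
    labels = sorted(patterns)
    return {
        (a, b): 1.0 - pearson(patterns[a], patterns[b])
        for a, b in combinations(labels, 2)
    }


def compare_rdms(rdm_a, rdm_b):
    """Correlate two RDMs defined over the same stimulus pairs."""
    keys = sorted(rdm_a)
    return pearson([rdm_a[k] for k in keys], [rdm_b[k] for k in keys])


# Toy (made-up) response vectors for four color/motion stimuli.
neural = {
    "red_up": [5.0, 1.0, 2.0],
    "red_down": [4.0, 1.5, 3.0],
    "green_up": [1.0, 5.0, 2.0],
    "green_down": [1.5, 4.0, 3.0],
}
model = {
    "red_up": [0.9, 0.1, 0.3],
    "red_down": [0.8, 0.2, 0.5],
    "green_up": [0.2, 0.9, 0.3],
    "green_down": [0.1, 0.8, 0.5],
}

similarity = compare_rdms(rdm(neural), rdm(model))
print(f"neural-vs-model RDM correlation: {similarity:.3f}")
```

Because the comparison happens between RDMs rather than between raw activity patterns, the neural recordings and the model layers do not need to have the same number of units.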

Results

1. Spike timing matters.

Spike timing–based measures (especially ISI distance) match the intended stimulus structure better than rate coding.
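The difference between timing-based and rate-based measures can be illustrated with a small sketch of the ISI distance. The version below follows the standard definition: at each moment, take the length of the current interspike interval in each train, compute |a - b| / max(a, b), and average over time. Treating the window edges as auxiliary spikes, the function names, and the toy spike times are assumptions for this example, not details taken from the study.

```python
from bisect import bisect_right


def isi_at(spikes, t):
    """Length of the interspike interval that contains time t.

    spikes must be sorted and include the window edges.
    """
    i = bisect_right(spikes, t)
    return spikes[i] - spikes[i - 1]


def isi_distance(train_a, train_b, t_start, t_end):
    """Time-averaged ISI dissimilarity between two spike trains (0 = identical)."""
    # Assumption: window edges act as auxiliary spikes.
    a = [t_start] + sorted(s for s in train_a if t_start < s < t_end) + [t_end]
    b = [t_start] + sorted(s for s in train_b if t_start < s < t_end) + [t_end]
    # Both ISI profiles are constant between consecutive merged spike times,
    # so the time integral reduces to a sum over those segments.
    edges = sorted(set(a) | set(b))
    total = 0.0
    for left, right in zip(edges, edges[1:]):
        mid = 0.5 * (left + right)
        xa, xb = isi_at(a, mid), isi_at(b, mid)
        total += (right - left) * abs(xa - xb) / max(xa, xb)
    return total / (t_end - t_start)


# Two made-up trains with the same spike count (same rate), different timing.
regular = [1.0, 2.0, 3.0]
bursty = [0.5, 0.6, 0.7]

rate_difference = abs(len(regular) - len(bursty)) / 4.0
timing_difference = isi_distance(regular, bursty, 0.0, 4.0)
print(rate_difference, timing_difference)
```

In this toy case the two trains have identical firing rates, so a rate code cannot tell them apart, while the ISI distance is greater than zero.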

2. The brain stretches representations.

All brain regions show greater dissimilarity between stimuli that differ on the task-relevant dimension (color or motion).

- Strong in: PFC, FEF, LIP

- Moderate but still present: V4 (color), MT (motion).
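One simple way to picture "stretching" is as a ratio: the mean dissimilarity between stimuli that differ only on the task-relevant dimension, divided by the mean dissimilarity between stimuli that differ only on the irrelevant one. The sketch below computes such a ratio with Euclidean distance. The `stretch_index` function, the distance measure, and the toy vectors are hypothetical illustrations, not the statistic reported in the study.

```python
from itertools import combinations
from math import dist


def mean_dissimilarity_by_dimension(patterns):
    """Mean Euclidean distance for pairs differing only in color or only in motion.

    patterns maps a (color, motion) tuple to a response vector.
    """
    sums = {"color": [0.0, 0], "motion": [0.0, 0]}
    for (s1, v1), (s2, v2) in combinations(patterns.items(), 2):
        color_differs = s1[0] != s2[0]
        motion_differs = s1[1] != s2[1]
        if color_differs == motion_differs:
            continue  # skip pairs that differ on both dimensions (or neither)
        key = "color" if color_differs else "motion"
        sums[key][0] += dist(v1, v2)
        sums[key][1] += 1
    return {k: total / n for k, (total, n) in sums.items()}


def stretch_index(patterns, relevant):
    """Ratio > 1 means the relevant dimension is stretched relative to the other."""
    means = mean_dissimilarity_by_dimension(patterns)
    irrelevant = "motion" if relevant == "color" else "color"
    return means[relevant] / means[irrelevant]


# Made-up 2-D responses during a color task: axis 0 tracks color, axis 1 motion.
color_task = {
    ("red", "up"): [2.0, 1.0],
    ("red", "down"): [2.0, -1.0],
    ("green", "up"): [-2.0, 1.0],
    ("green", "down"): [-2.0, -1.0],
}

print(stretch_index(color_task, "color"))  # 2.0
```

An index near 1 would indicate no task-dependent stretching; computing it separately per brain region or model layer would give one number per area to compare.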

3. The deep learning model behaves the same way.

The CNN-LSTM also stretches its internal representations along task-relevant dimensions even without explicit attention mechanisms.

4. Biological vs. artificial flexibility differs.

- The LSTM can completely reconfigure itself.

- Biological areas (especially MT, V4) remain partially modality-bound.

Discussion

This study shows that both brains and deep learning systems use adaptive stretching as a natural strategy to enhance task-relevant distinctions. The findings highlight:

- The importance of spike timing in cognitive tasks.

- The possibility that top-down control emerges naturally from error-driven learning.

- The brain’s mixture of flexibility (PFC) and specialization (V4, MT).

- The ability of a deep neural network to mimic these behaviors without explicit attention modules.

Conclusion

The research demonstrates that adaptive stretching of task-relevant information is a fundamental mechanism shared by biological neural circuits and deep learning networks. The brain dynamically reconfigures representations to optimize performance, and deep models learn similar strategies. This bridges neuroscience and AI, offering new insights into attention, learning, and neural coding.
